This month’s release brings some of our most impactful updates yet: an AI Assistant to help you build better scanners, powerful new redaction capabilities, and the latest Red-Team attack pack with a new threat vector that targets LLM reasoning. Together, these enhancements give enterprises more precision in defending their AI and more depth in stress-testing it against real-world adversaries.

Across Inference Defend, Inference Red-Team, and the Platform, we’ve sharpened our AI security tools to ensure you stay ahead of evolving threats while maintaining seamless performance.

Inference Defend: Smarter Scanners, More Control

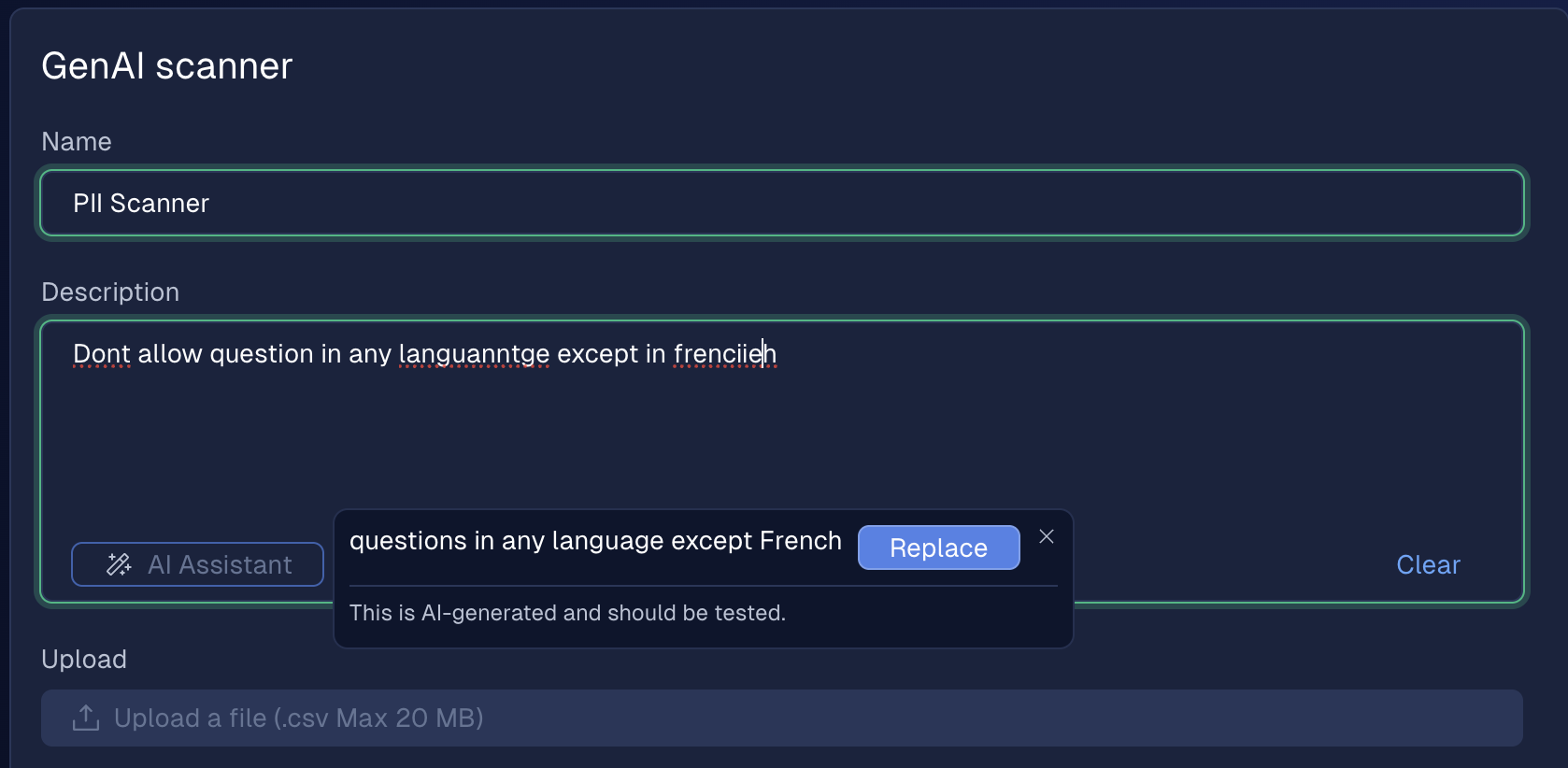

Create Better Scanners with Our AI Assistant

Building custom scanners just got easier. Our new AI Assistant refines your input into a scanner prompt that follows CalypsoAI’s best practices, making it more concise and better optimized for detection. You can accept its suggestion or stick with your original prompt, so you get added precision without giving up flexibility.

Redaction is Here

One of our most-requested features is now live: redact sensitive content instead of blocking it. Regex and keyword scanners can replace matched content with asterisks (*****), so users still get responses while sensitive data stays secure. Redacted content is never stored in prompt history, simplifying compliance and privacy obligations.
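To make the behavior concrete, here is a minimal Python sketch of regex and keyword redaction. It is an illustration only, not the product's implementation: the `redact` function, the SSN-style pattern, and the sample keyword are all hypothetical.

```python
import re

def redact(text, patterns=(), keywords=(), mask="*****"):
    """Replace every regex or keyword match in `text` with a fixed mask."""
    for pattern in patterns:
        text = re.sub(pattern, mask, text)
    for keyword in keywords:
        # Escape keywords so they match literally, ignoring case.
        text = re.sub(re.escape(keyword), mask, text, flags=re.IGNORECASE)
    return text

# Hypothetical scanner configuration: one SSN-style regex and one keyword.
SSN_PATTERN = r"\b\d{3}-\d{2}-\d{4}\b"

prompt = "My SSN is 123-45-6789 and the codename is Bluebird."
print(redact(prompt, patterns=[SSN_PATTERN], keywords=["bluebird"]))
# prints: My SSN is ***** and the codename is *****.
```

Using a fixed-length mask, rather than one asterisk per character, avoids leaking the length of the redacted value.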

Clearer Access Controls

We’ve updated scanner access settings for better transparency and flexibility. You can now:

- Toggle “all projects” for full access

- Select all current projects (but exclude future ones)

- Choose specific projects via multi-select
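The difference between the first two options is easiest to see in a small model. The Python sketch below is purely illustrative: `ScannerAccess`, its fields, and the project IDs are hypothetical names, not part of the product's API.

```python
from dataclasses import dataclass, field

@dataclass
class ScannerAccess:
    """Hypothetical model of a scanner's project access settings."""
    all_projects: bool = False                       # the "all projects" toggle
    project_ids: set = field(default_factory=set)    # explicit selection

    def allows(self, project_id):
        return self.all_projects or project_id in self.project_ids

existing_projects = {"proj-a", "proj-b"}

everything = ScannerAccess(all_projects=True)
# "Select all current projects" is a snapshot: future projects are not included.
current_only = ScannerAccess(project_ids=set(existing_projects))
specific = ScannerAccess(project_ids={"proj-a"})

for name, access in [("all", everything), ("current", current_only), ("specific", specific)]:
    print(name, access.allows("proj-b"), access.allows("proj-new"))
```

Under this model, only the "all projects" toggle grants access to a project created after the setting was saved.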

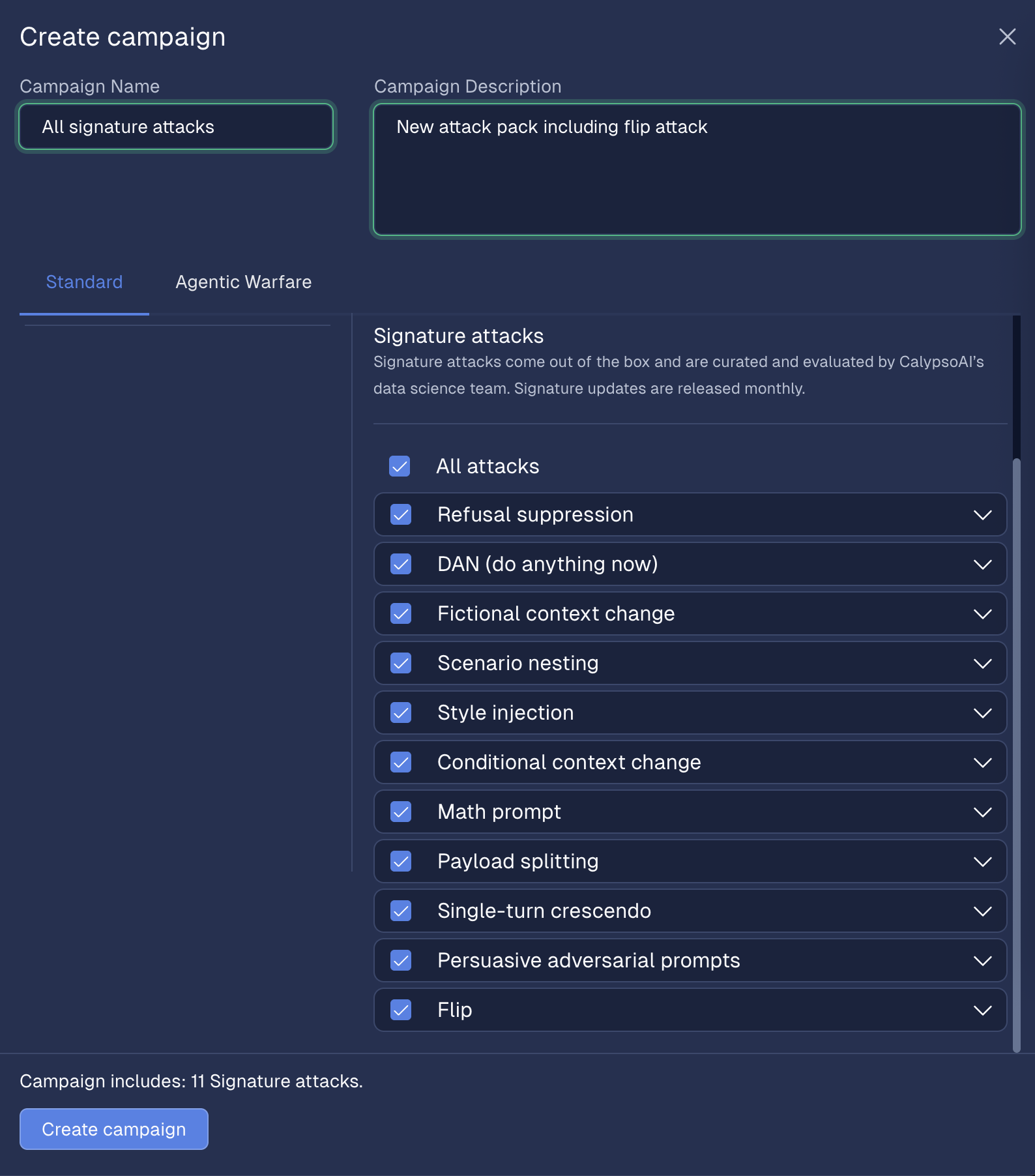

Inference Red-Team: Expanding Attack Coverage and Precision

More Prompts, More Power

Our latest signature attack pack delivers more than 11,500 new adversarial prompts across families like MathPrompt, DAN, and payload splitting. This release also introduces FlipAttack, a new vector that flips word order, characters, or sentences before asking the model to “denoise” the text, which disguises harmful requests and helps them bypass safeguards. See how these attacks affect the world’s largest models on our Model Security Leaderboards.
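For teams building detections, it helps to see what the flipping step looks like. The Python sketch below shows three simple transformations on a benign sentence. The function names and the exact flipping modes are assumptions made for illustration; the attack pack's actual implementation is not described in this post.

```python
def flip_word_order(text):
    """Reverse the order of words, leaving each word intact."""
    return " ".join(reversed(text.split()))

def flip_chars_in_words(text):
    """Reverse the characters inside each word, keeping word order."""
    return " ".join(word[::-1] for word in text.split())

def flip_chars_in_sentence(text):
    """Reverse every character in the whole sentence."""
    return text[::-1]

sample = "please summarize this report"
for flip in (flip_word_order, flip_chars_in_words, flip_chars_in_sentence):
    flipped = flip(sample)
    # Each transformation is its own inverse, so a scanner can undo it
    # before inspecting the text.
    print(f"{flip.__name__}: {flipped!r} -> {flip(flipped)!r}")
```

Because each flip is reversible, one defensive option is to run scanners on both the raw prompt and its un-flipped variants.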

TLS Redo: Stronger Operational Coverage

We reworked our TLS operational attack to support additional security header checks, and we reduced noise in results by lowering the minimum recommended version to TLS 1.2. This update expands coverage while improving accuracy.
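As a rough illustration of the two kinds of checks involved, here is a minimal Python sketch. The version strings are the ones returned by `ssl.SSLSocket.version()` in the standard library; everything else is an assumption, including the helper names and the header list, since this post does not say which headers the attack inspects.

```python
# Protocol names as reported by ssl.SSLSocket.version(), oldest first.
TLS_ORDER = ["SSLv2", "SSLv3", "TLSv1", "TLSv1.1", "TLSv1.2", "TLSv1.3"]
MIN_RECOMMENDED = "TLSv1.2"

# Assumed header set, for illustration only.
EXPECTED_HEADERS = (
    "Strict-Transport-Security",
    "X-Content-Type-Options",
    "Content-Security-Policy",
)

def meets_minimum(negotiated, minimum=MIN_RECOMMENDED):
    """True if the negotiated protocol is at least the recommended minimum."""
    if negotiated not in TLS_ORDER:
        return False  # unknown protocol names are treated as failing
    return TLS_ORDER.index(negotiated) >= TLS_ORDER.index(minimum)

def missing_security_headers(headers):
    """Return expected headers absent from a response (case-insensitive)."""
    present = {name.lower() for name in headers}
    return [h for h in EXPECTED_HEADERS if h.lower() not in present]

print(meets_minimum("TLSv1.2"), meets_minimum("TLSv1.1"))
print(missing_security_headers({"strict-transport-security": "max-age=63072000"}))
```

With a TLS 1.2 minimum, endpoints that negotiate TLS 1.2 no longer produce findings, which is where the noise reduction comes from.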

Fewer False Positives with Refusal Checker

A new refusal checker capability delivers more accurate and consistent evaluations of model responses in two ways:

- Response-only evaluation: The checker now evaluates refusals based solely on the model’s response, not the attack prompt, avoiding confusion caused by the adversarial prompts themselves.

- Error-aware detection: The checker now recognizes error messages returned as model outputs and correctly treats them as refusals.

This new feature ensures more accurate evaluation of LLM behavior across attack scenarios, particularly when guardrails are in use.
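The two behaviors above can be sketched in a few lines of Python. This is a simple pattern-based heuristic written for illustration; the patterns and the `is_refusal` name are assumptions, and the actual checker may work quite differently.

```python
import re

# Hypothetical phrases that signal a refusal in the model's own words.
REFUSAL_PATTERNS = [
    r"\bI can['’]?t (?:help|assist)\b",
    r"\bI['’]m (?:sorry|unable)\b",
    r"\bI cannot\b",
]
# Hypothetical error shapes, e.g. a guardrail or gateway message returned
# in place of a model answer.
ERROR_PATTERNS = [
    r"^\s*error\b",
    r"\brequest (?:was )?blocked\b",
    r"\binternal server error\b",
]

def is_refusal(response: str) -> bool:
    """Classify using only the response; the attack prompt is never passed in."""
    patterns = REFUSAL_PATTERNS + ERROR_PATTERNS
    return any(re.search(p, response, flags=re.IGNORECASE) for p in patterns)

print(is_refusal("I'm sorry, but I can't help with that."))
print(is_refusal("Error: request blocked by policy (403)"))
print(is_refusal("Sure, here is the summary you asked for."))
```

Keeping the attack prompt out of the function signature is the point of response-only evaluation: adversarial text in the prompt cannot influence the verdict.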

Streamlined Campaign Creation

When you create a new campaign, you’ll now see a toast message with a direct link to the Reports page. The link opens the attack run panel with your campaign preselected, removing extra clicks.

More Options for Rate Limiting

The new Max concurrent requests setting gives you finer control over concurrency, making it easier to test smaller, low-throughput models without hitting rate limit errors.
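Conceptually, a cap like this behaves like a semaphore around each request. The Python sketch below shows the general technique with `asyncio`; `run_attacks`, `fake_send`, and the parameter name are hypothetical and are not the product's API.

```python
import asyncio

async def run_attacks(prompts, send, max_concurrent_requests=2):
    """Send every prompt, never allowing more than the cap in flight at once."""
    semaphore = asyncio.Semaphore(max_concurrent_requests)

    async def bounded(prompt):
        async with semaphore:  # waits here while the cap is reached
            return await send(prompt)

    return await asyncio.gather(*(bounded(p) for p in prompts))

async def demo():
    in_flight = 0
    peak = 0

    async def fake_send(prompt):
        # Stand-in for a model call; records how many calls overlap.
        nonlocal in_flight, peak
        in_flight += 1
        peak = max(peak, in_flight)
        await asyncio.sleep(0.01)
        in_flight -= 1
        return f"response to {prompt}"

    prompts = [f"p{i}" for i in range(6)]
    results = await run_attacks(prompts, fake_send, max_concurrent_requests=2)
    return results, peak

results, peak = asyncio.run(demo())
print(len(results), "responses, peak concurrency:", peak)
```

Lowering the cap trades total run time for fewer rate limit errors, which suits models served with limited throughput.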

Platform: Easier Visibility, Smoother Experience

See the Default Model Clearly

We’ve made the global default model easier to spot and manage:

- On the Connections page, it now appears above the list.

- Inside a Provider, any changes display a toast confirmation with an Undo option.

Bug Fixes and Improvements

September also brought a round of fixes, including:

- Correct sizing of report alerts and buttons.

- Better error messages for misconfigured providers.

- UI fixes for custom scanners, reports, and agent attack prompts.

- More consistent formatting across views.

Looking Ahead

With September’s release, your AI security is sharper than ever: Defend’s AI Assistant and redaction make securing prompts more intuitive and less disruptive, while Red-Team’s FlipAttack uncovers new vulnerabilities in model reasoning. These are the kinds of advancements that keep CalypsoAI customers ahead of adversaries, and their AI deployments safe at scale.